Learn AI

without wasting time

First things first

I call this page a portal.

You won’t find a magical collection of material that turns you into an AI expert overnight. AI is now a field of its own. To use it well, you need to study and practice.

This page is not an AI university. It is a portal that points you to carefully selected resources and websites so you can learn the fundamentals and start building things from day one.

It’s designed for people from many backgrounds (Mechanical Engineering, Chemical Engineering, Physics, Chemistry, and more) who want to use AI at a practical level for their own work.

So if AI is not your main educational or professional focus, but it is important for your studies or your job, you’re in the right place.

Frequently asked questions

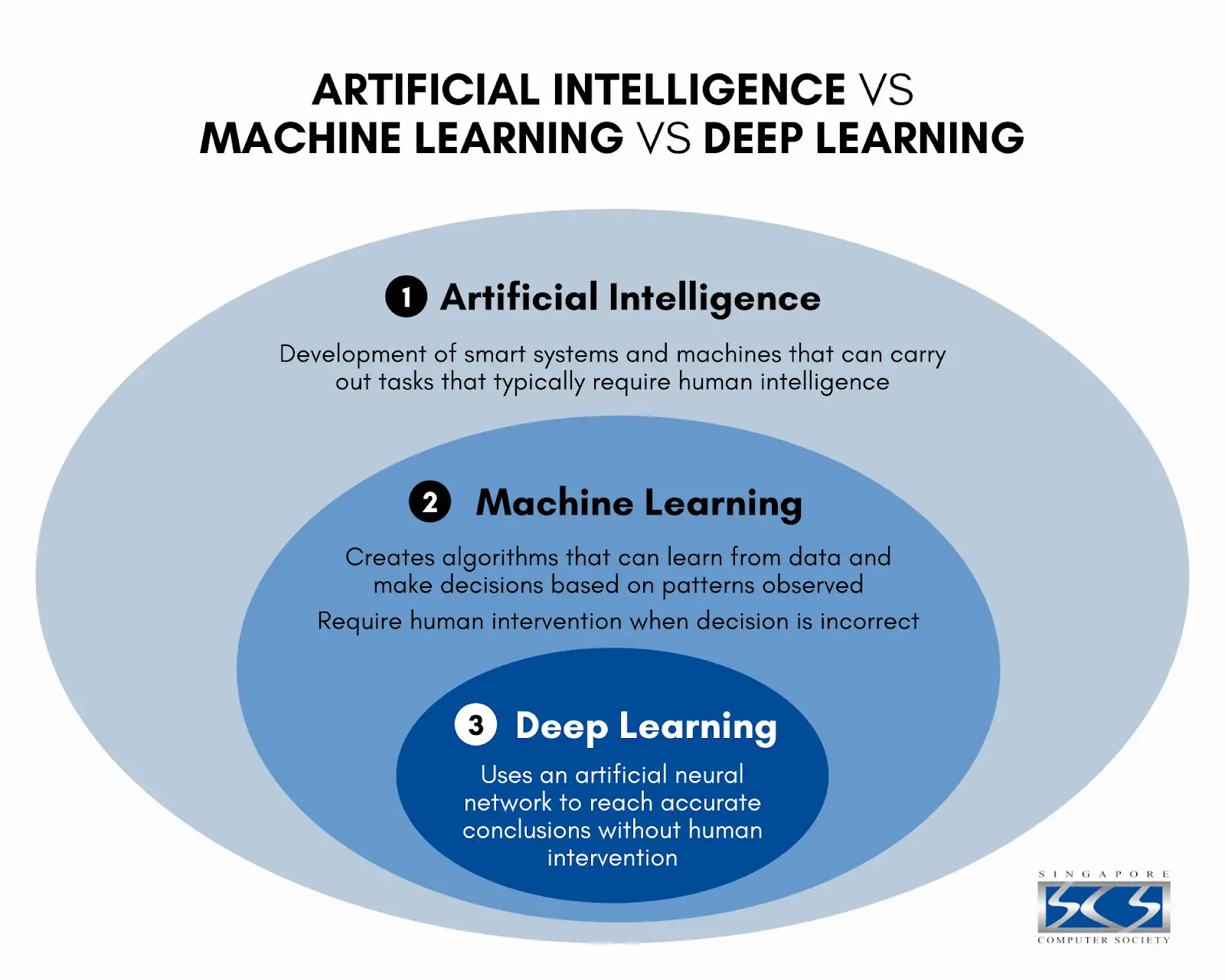

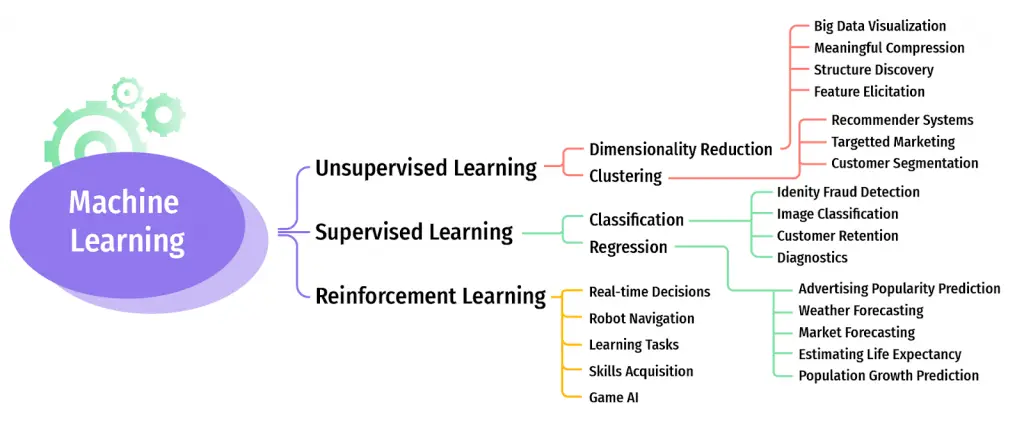

I am confused. What is AI, Machine Learning, Deep Learning, LLMs?

So what can Machine Learning do?

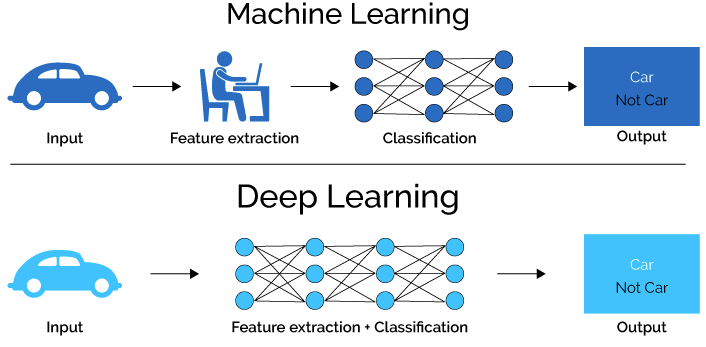

Deep Learning vs Machine Learning?

And what about GPT?

I don't know much about Math, Statistics, or Programming. What now?

Well, do not expect to understand the basic principles of most AI algorithms if you are completely unfamiliar with Linear Algebra, Statistics, Probability theory, and partial derivatives. I suggest you study these first!

Not knowing MATLAB or Python is fine. You will learn them through practice.

Where do I start?

Step 1: Read the suggested slides from university courses

Step 2: Gain more insights by reading the tutorials

Step 3: Get your hands on Python

Step 4: Read a damn book!

Step 5: Read more books.

How and where to install Python

Forget what you hear about how easy Python is. It is easy AFTER you have successfully installed it on your machine and everything is working fine.

You will need a PC. If your PC can open 10 Google Chrome tabs without crashing, it can handle light Python projects. If your datasets are images or videos, do not try it on a basic laptop: training deep learning (DL) models on a CPU takes a very long time (weeks). Right now, a decent PC with a GPU suitable for deep learning on image or video datasets of up to a few thousand samples would cost you 1200-2000, and even then it will be slow. If you only have tabular data (Excel, CSV, etc.), even a laptop will do the job (if you are patient).

Linux or Windows?

I used Windows for 5 years on 3 different machines. I regret it. The time I spent figuring out how to install Python, how to use Anaconda, and how to configure Python to use my GPU was more than it would have taken me to learn Linux.

Linux is the way. Linux Mint, Ubuntu, etc. are just fine. Installing Python and its libraries and configuring the GPU takes about half an hour, provided you have good guidance (yes, GPT).

What about GPU?

NVIDIA GPUs support CUDA, which the most widely used deep learning frameworks use for GPU acceleration. Look for one within your budget. Be prepared: they are expensive.
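A quick way to confirm that Python can actually see an NVIDIA GPU is to ask the deep learning framework directly. This is a minimal sketch assuming PyTorch is the framework you installed (the page does not prescribe one); `describe_compute_device` is a hypothetical helper name, and the function still runs if PyTorch is missing.

```python
import importlib.util


def describe_compute_device():
    """Report whether PyTorch (an assumed framework) can use an NVIDIA GPU via CUDA."""
    if importlib.util.find_spec("torch") is None:
        return "PyTorch is not installed, so CUDA cannot be queried this way."
    import torch

    if torch.cuda.is_available():
        # Device 0 is the first GPU visible to this process.
        return f"CUDA GPU found: {torch.cuda.get_device_name(0)}"
    return "No CUDA GPU visible; PyTorch will run on the CPU."


if __name__ == "__main__":
    print(describe_compute_device())
```

If this reports no GPU on a machine that has one, the usual suspects are the NVIDIA driver or a CPU-only build of the framework.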

Frequently asked technical questions

Start with this “starter pack”:

Jupyter Notebook or Google Colab for interactive experiments.

NumPy and pandas for numerical work and data manipulation.

matplotlib for basic plots and visualizations.

scikit-learn for classical ML (regression, classification, clustering).

Deep learning frameworks can wait until these feel comfortable.
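Since installation is where most time gets lost, it can help to verify the starter pack before writing any ML code. A small sketch using only the standard library's `importlib.metadata`; the package list mirrors the starter pack above, and `check_starter_pack` is a hypothetical helper name.

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names as published on PyPI ("scikit-learn", not "sklearn").
STARTER_PACK = ["numpy", "pandas", "matplotlib", "scikit-learn", "jupyter"]


def check_starter_pack(packages=STARTER_PACK):
    """Map each package name to its installed version, or None if missing."""
    report = {}
    for name in packages:
        try:
            report[name] = version(name)
        except PackageNotFoundError:
            report[name] = None
    return report


if __name__ == "__main__":
    for name, installed in check_starter_pack().items():
        print(f"{name:15s} {installed or 'NOT INSTALLED'}")
```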

Choose one small, well-defined use case (e.g. failure prediction, signal classification, anomaly detection).

List what data you already have or could log with minimal effort.

Start with simple, interpretable models (linear models, decision trees, random forests) to get a baseline.

Focus on whether it improves your current workflow, not on being cutting-edge.

Small dataset? You can still use ML, but your options change.

You can use pretrained models (for images, text, etc.) and fine-tune slightly with your small dataset.

You can generate synthetic data from simulations, physical models, or existing tools.

You can also focus on simpler models that need fewer samples and encode strong domain assumptions.
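As an illustration of the last two points, the sketch below generates a small synthetic dataset from a simple physical law and then fits a model whose structure encodes that law. The pendulum formula T = 2π√(L/g), the noise level, the sample count, and the helper `simulate_pendulum` are illustrative assumptions, not a recommended workflow for any particular system.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

G = 9.81  # gravitational acceleration in m/s^2


def simulate_pendulum(n_samples, noise_std=0.01, seed=0):
    """Synthetic data from the small-angle pendulum law T = 2*pi*sqrt(L/g)."""
    rng = np.random.default_rng(seed)
    length = rng.uniform(0.1, 2.0, size=n_samples)        # metres
    period = 2 * np.pi * np.sqrt(length / G)               # seconds
    period += rng.normal(0.0, noise_std, size=n_samples)   # simulated measurement noise
    return length, period


# Only 15 "measurements": too few for a flexible model, enough for a physics-shaped one.
length, period = simulate_pendulum(15)

# Domain assumption encoded as a feature: T is proportional to sqrt(L), no intercept.
X = np.sqrt(length).reshape(-1, 1)
model = LinearRegression(fit_intercept=False).fit(X, period)

print(f"learned coefficient:         {model.coef_[0]:.3f}")
print(f"physics value 2*pi/sqrt(g):  {2 * np.pi / np.sqrt(G):.3f}")
```

Because the feature √L already carries the physics, a handful of noisy samples is enough to recover a coefficient close to 2π/√g; a flexible model given only raw L would typically need more data to learn the same curve.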

Training: learning the model parameters from data, often from scratch.

Fine-tuning: starting from a pretrained model and adapting it to your specific data/task.

Inference: using an already trained model to make predictions on new inputs.

In many practical engineering setups, you mostly do inference and occasional fine-tuning, not full training.
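In scikit-learn terms, training is `fit` and inference is `predict`. Classical models have no direct equivalent of fine-tuning a pretrained network, so this sketch uses `SGDRegressor.partial_fit` as an analogy: it keeps the learned weights and continues updating them on a small new dataset. Both datasets are synthetic and hypothetical; real fine-tuning of pretrained image or text models is done in a deep learning framework.

```python
import numpy as np
from sklearn.linear_model import SGDRegressor

rng = np.random.default_rng(0)

# Hypothetical "generic" dataset: y = 3*x0 - 2*x1 + noise.
X_generic = rng.normal(size=(500, 2))
y_generic = 3 * X_generic[:, 0] - 2 * X_generic[:, 1] + rng.normal(0, 0.1, 500)

# 1) Training: learn the parameters from scratch.
model = SGDRegressor(random_state=0)
model.fit(X_generic, y_generic)

# Hypothetical small dataset from "your" system: the first slope is slightly different.
X_mine = rng.normal(size=(30, 2))
y_mine = 3.5 * X_mine[:, 0] - 2 * X_mine[:, 1] + rng.normal(0, 0.1, 30)

# 2) Fine-tuning analogy: keep the learned weights and keep updating them on
#    the small dataset instead of starting over (each partial_fit is one epoch).
for _ in range(20):
    model.partial_fit(X_mine, y_mine)

# 3) Inference: predictions on new inputs; no parameters change here.
X_new = np.array([[1.0, 0.0], [0.0, 1.0]])
print(model.predict(X_new))
```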

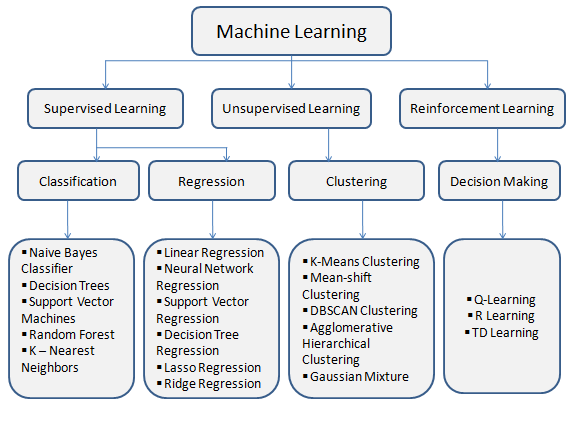

Supervised learning: you have inputs and known outputs (labels); you learn to predict the outputs.

Unsupervised learning: you only have inputs; you look for structure (clusters, embeddings).

Reinforcement learning: an agent learns by trial and error using rewards.

Most engineering applications start with supervised learning and mostly stay there.
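A minimal contrast between the first two settings, on hypothetical two-sensor data from a machine with a normal and a faulty regime: the supervised model is given the labels, the clustering model is not. Reinforcement learning needs an environment and a reward loop, so it is left out of this sketch.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.linear_model import LogisticRegression

rng = np.random.default_rng(42)

# Hypothetical two-sensor readings from a machine in two regimes.
normal = rng.normal(loc=[0.0, 0.0], scale=0.5, size=(100, 2))
faulty = rng.normal(loc=[3.0, 3.0], scale=0.5, size=(100, 2))
X = np.vstack([normal, faulty])
y = np.array([0] * 100 + [1] * 100)  # labels: 0 = normal, 1 = faulty

# Supervised: inputs AND known outputs; learn to predict the label.
clf = LogisticRegression().fit(X, y)
print("predicted label for [2.8, 3.1]:", clf.predict([[2.8, 3.1]])[0])

# Unsupervised: inputs only; look for structure (here, two clusters).
km = KMeans(n_clusters=2, n_init=10, random_state=0).fit(X)
print("cluster sizes:", np.bincount(km.labels_))
```

KMeans cluster numbers are arbitrary: it finds the two groups but cannot know which one is "faulty". That meaning only comes from labels.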

Always measure performance on data the model has not seen (test/validation sets).

Pick metrics that match the real problem (MSE, MAE, accuracy, precision/recall, etc.).

Compare against a simple baseline (mean predictor, linear regression, simple rules).

Then ask: is this error acceptable in my real system (cost, risk, safety), not just mathematically small?
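The checks above (held-out data, a metric that fits the problem, a trivial baseline) can be sketched with scikit-learn in a few lines. The dataset is synthetic and MAE is just one possible metric; swap in whatever matches your real problem.

```python
import numpy as np
from sklearn.dummy import DummyRegressor
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)

# Hypothetical dataset: 3 features, the target depends linearly on two of them.
X = rng.normal(size=(300, 3))
y = 4.0 * X[:, 0] - 1.5 * X[:, 2] + rng.normal(0, 0.5, 300)

# Hold out 25% of the data; the models never see it during fitting.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

baseline = DummyRegressor(strategy="mean").fit(X_train, y_train)
model = LinearRegression().fit(X_train, y_train)

mae_baseline = mean_absolute_error(y_test, baseline.predict(X_test))
mae_model = mean_absolute_error(y_test, model.predict(X_test))

print(f"MAE baseline (predict the mean): {mae_baseline:.3f}")
print(f"MAE linear regression:           {mae_model:.3f}")
# Whether mae_model is "good enough" is an engineering question:
# compare it with the tolerance of your real system.
```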

Does your model need to be explainable? If it affects safety, money, or legal decisions, yes, it should be.

Prefer simpler models when possible, or add interpretability tools (feature importance, SHAP, partial dependence).

In many engineering cases, a slightly less accurate but more explainable model is the safer choice.

Aim to be able to justify model decisions to a non-ML colleague.
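One model-agnostic interpretability tool is permutation importance: shuffle one feature on held-out data and see how much the score drops. A sketch with scikit-learn's `permutation_importance`; the feature names and the data are hypothetical, constructed so that the expected ranking is known in advance.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(1)
feature_names = ["temperature", "pressure", "noise_channel"]  # hypothetical

X = rng.normal(size=(400, 3))
# The target depends strongly on temperature, less on pressure, not at all on noise.
y = 5.0 * X[:, 0] + 2.0 * X[:, 1] + rng.normal(0, 0.3, 400)

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
model = RandomForestRegressor(n_estimators=100, random_state=0).fit(X_train, y_train)

# Shuffle one feature at a time on held-out data and measure the drop in R^2.
result = permutation_importance(model, X_test, y_test, n_repeats=10, random_state=0)
for name, mean, std in zip(feature_names, result.importances_mean, result.importances_std):
    print(f"{name:15s} {mean:.3f} +/- {std:.3f}")
```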

Will AI/ML replace classical engineering tools? Probably not; think of it as a complement, not a replacement.

ML is strong where explicit models are hard (complex patterns, messy data).

Classical tools excel when physics and theory are well understood.

Hybrid approaches (simulations + ML, model-based + data-driven) are often the most powerful.

Using very complex models with tiny datasets → overfitting.

Evaluating only on training data, not on a proper test set.

Data leakage (letting test information sneak into training).

Focusing only on accuracy while ignoring constraints (latency, safety, cost).

Not tracking code/data versions, so results can’t be reproduced.
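Data leakage is easy to introduce by accident, for example by scaling the full dataset before splitting it. A sketch of one common guard, assuming scikit-learn: put preprocessing inside a `Pipeline`, so that `cross_val_score` re-fits the scaler on each training fold only. The data, the `Ridge` model, and `alpha=1.0` are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
y = X @ np.array([1.0, 0.5, 0.0, 0.0, -2.0]) + rng.normal(0, 0.3, 200)

# Leaky version (avoid): scaling the whole dataset first lets statistics of the
# test folds influence training.
#   X_scaled = StandardScaler().fit_transform(X)
#   cross_val_score(Ridge(), X_scaled, y, cv=5)

# Safer version: the scaler is fitted inside each training fold only.
pipeline = make_pipeline(StandardScaler(), Ridge(alpha=1.0))
scores = cross_val_score(pipeline, X, y, cv=5, scoring="r2")
print(f"R^2 per fold: {np.round(scores, 3)}")
print(f"mean R^2: {scores.mean():.3f}")
```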

Wrap the model as a function or service that takes inputs and returns outputs.

Typical patterns: a Python script, a REST API, or a component in your existing pipeline/toolchain.

At first, a simple script reading/writing files is enough.

Later, you can add logging, monitoring, and proper deployment infrastructure.
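A minimal sketch of the "simple script reading/writing files" stage, assuming scikit-learn and pandas: one function trains and saves a model, another reads inputs from a CSV and writes predictions to another CSV. The file names, column names, and helper functions are hypothetical, and `pickle` is used for simplicity (only unpickle files you trust).

```python
import pickle
import tempfile
from pathlib import Path

import pandas as pd
from sklearn.linear_model import LinearRegression

MODEL_PATH = Path("model.pkl")  # hypothetical default location


def train_and_save(df, feature_cols, target_col, path=MODEL_PATH):
    """Fit a model and store it together with the feature names it expects."""
    model = LinearRegression().fit(df[feature_cols], df[target_col])
    with open(path, "wb") as f:
        pickle.dump({"model": model, "features": feature_cols}, f)


def predict_file(input_csv, output_csv, path=MODEL_PATH):
    """Read inputs from a CSV, append a 'prediction' column, write a new CSV."""
    with open(path, "rb") as f:
        bundle = pickle.load(f)  # only load pickle files you created or trust
    df = pd.read_csv(input_csv)
    df["prediction"] = bundle["model"].predict(df[bundle["features"]])
    df.to_csv(output_csv, index=False)
    return df


def run_demo():
    """Toy end-to-end run: learn y = 2x + 1, then predict x = 10 via files."""
    with tempfile.TemporaryDirectory() as tmp:
        tmp = Path(tmp)
        train = pd.DataFrame({"x": [0.0, 1.0, 2.0, 3.0], "y": [1.0, 3.0, 5.0, 7.0]})
        train_and_save(train, ["x"], "y", tmp / "model.pkl")
        pd.DataFrame({"x": [10.0]}).to_csv(tmp / "inputs.csv", index=False)
        return predict_file(tmp / "inputs.csv", tmp / "predictions.csv", tmp / "model.pkl")


if __name__ == "__main__":
    print(run_demo())
```

Later, the same `predict_file` logic could sit behind a REST endpoint or a pipeline step without changing the model code.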

Expect missing values, outliers, wrong labels, and weird formats—it’s normal.

Start by exploring with simple plots and summary stats.

Handle missing data explicitly (drop, impute, or encode “unknown”), and document your choices.

Often, 60–80% of the time in an ML project is data cleaning, not model training.
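A sketch of the first cleaning pass described above, with pandas on a hypothetical sensor log: look at summary stats, count missing values, mark impossible readings as missing, then impute while keeping a flag that records what was imputed. The 200 °C threshold and median imputation are assumptions for this example, not general rules.

```python
import numpy as np
import pandas as pd

# Hypothetical sensor log: two gaps and one physically impossible reading.
df = pd.DataFrame({
    "temperature_c": [71.2, np.nan, 69.8, 70.5, 950.0],
    "pressure_bar": [1.01, 1.02, np.nan, 1.00, 1.03],
})

print(df.describe())    # summary statistics per column
print(df.isna().sum())  # number of missing values per column

# Treat impossible values as missing (the 200 °C limit is an assumption).
df.loc[df["temperature_c"] > 200, "temperature_c"] = np.nan

# Record which values were missing, then impute with the column median.
for col in ["temperature_c", "pressure_bar"]:
    df[f"{col}_was_missing"] = df[col].isna()
    df[col] = df[col].fillna(df[col].median())
```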

Tabular data (rows = samples, columns = features) is the easiest starting point.

CSV/Parquet are common; relational databases are fine too as long as you can extract tables.

For signals/images/time series, you often convert them into features or consistent tensors.

Whatever you choose, make it easy for someone else to re-load and understand your data.
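For signals and time series, one common way to get a tidy table is to cut the signal into fixed-length windows and compute a few summary features per window, so each row becomes one sample. A sketch with NumPy and pandas; the synthetic vibration signal, the window length, and the chosen features are assumptions for illustration.

```python
import numpy as np
import pandas as pd


def signal_to_feature_table(signal, window, sample_rate_hz):
    """Cut a 1-D signal into non-overlapping windows; one row of features each."""
    signal = np.asarray(signal)
    n_windows = len(signal) // window  # an incomplete tail window is dropped
    windows = signal[: n_windows * window].reshape(n_windows, window)
    return pd.DataFrame({
        "t_start_s": np.arange(n_windows) * window / sample_rate_hz,
        "mean": windows.mean(axis=1),
        "std": windows.std(axis=1),
        "peak_to_peak": np.ptp(windows, axis=1),
        "rms": np.sqrt((windows ** 2).mean(axis=1)),
    })


# Hypothetical vibration signal: 10 s sampled at 100 Hz, 5 Hz tone plus noise.
rng = np.random.default_rng(0)
t = np.arange(1000) / 100.0
signal = np.sin(2 * np.pi * 5 * t) + 0.1 * rng.normal(size=t.size)

table = signal_to_feature_table(signal, window=100, sample_rate_hz=100)
print(table.head())
```

The result can be saved with `table.to_csv(...)` and reloaded with `pd.read_csv(...)`; the column names document what each feature means.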

Feature engineering is often more important than choosing between two fancy models.

Good features encode domain knowledge (ratios, aggregated statistics, derived indicators).

Start with simple models and invest effort in better features, then upgrade models if needed.

A simple model with good features often beats a complex model with raw data.
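A small example of the kinds of features mentioned above (a ratio, a per-group aggregate, and a derived deviation indicator), using pandas `groupby(...).transform`. The pump log, column names, and the choice of power per unit flow as the ratio are hypothetical.

```python
import pandas as pd

# Hypothetical per-cycle log from two pumps.
log = pd.DataFrame({
    "pump_id": ["A", "A", "A", "B", "B", "B"],
    "power_kw": [10.0, 11.0, 12.0, 20.0, 19.0, 21.0],
    "flow_m3_h": [50.0, 52.0, 48.0, 80.0, 82.0, 70.0],
})

# Ratio feature: power drawn per unit of flow (a domain-motivated indicator).
log["kw_per_flow"] = log["power_kw"] / log["flow_m3_h"]

# Aggregated statistic: each pump's mean ratio, copied back onto its rows.
log["kw_per_flow_pump_mean"] = log.groupby("pump_id")["kw_per_flow"].transform("mean")

# Derived indicator: deviation of this cycle from the pump's usual ratio.
log["kw_per_flow_deviation"] = log["kw_per_flow"] - log["kw_per_flow_pump_mean"]

print(log.round(3))
```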

Use deep learning when your data is high-dimensional and structured: images, audio, text, complex time series.

For small tabular datasets, classical models (trees, linear, gradient boosting) are usually simpler and competitive.

Deep learning needs more data, more compute, and more tuning.

Don’t reach for it just because it’s fashionable—use it when it clearly fits the data type and scale.

Don’t try to understand every equation at first.

Focus on: problem setup, data used, high-level method, key results, and limitations.

Ask: does this solve a problem similar to mine, and is the complexity worth it?

If yes, look for code or implementations before trying to re-derive everything.

Use version control (e.g. Git) for code and configuration.

Fix random seeds when possible, or at least document them.

Keep track of data versions or snapshots, not just “data.csv”.

Store the environment details (library versions) or use containers so others can rerun your experiments.
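A sketch of the seed and environment points above, using only the standard library plus NumPy: fix the random generators, then record Python, OS, and library versions next to your results. `set_seeds`, `environment_snapshot`, and the seed value are hypothetical names and choices; frameworks such as PyTorch have their own seeding calls that this sketch does not cover.

```python
import json
import platform
import random
import sys
from importlib.metadata import PackageNotFoundError, version

import numpy as np

SEED = 42  # document this value alongside your results


def set_seeds(seed=SEED):
    """Fix the random number generators used by this script."""
    random.seed(seed)
    np.random.seed(seed)  # legacy global NumPy RNG, still used by many libraries
    return np.random.default_rng(seed)  # preferred: pass this generator around


def environment_snapshot(packages=("numpy", "pandas", "scikit-learn")):
    """Record Python, OS, and library versions for the experiment log."""
    versions = {}
    for name in packages:
        try:
            versions[name] = version(name)
        except PackageNotFoundError:
            versions[name] = None
    return {
        "python": sys.version.split()[0],
        "platform": platform.platform(),
        "seed": SEED,
        "packages": versions,
    }


rng = set_seeds()
print(json.dumps(environment_snapshot(), indent=2))
```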

When you have a clear, reliable analytic formula or a simple rule that works well.

When you have almost no data and no way to collect it.

When the problem is heavily constrained by regulation and you cannot explain or audit the model.

When the cost of errors is extremely high and you cannot tolerate uncertainty.

Slides from universities

Top university slides for ML, DL, and LLMs

Oxford - Machine Learning

https://www.cs.ox.ac.uk/people/varun.kanade/teaching/ML-MT2016/slides/slides01.pdf

https://www.cs.ox.ac.uk/people/varun.kanade/teaching/ML-MT2016/slides/slides02.pdf

https://www.cs.ox.ac.uk/people/varun.kanade/teaching/ML-MT2016/slides/slides03.pdf

https://www.cs.ox.ac.uk/people/varun.kanade/teaching/ML-MT2016/slides/slides04.pdf

https://www.cs.ox.ac.uk/people/varun.kanade/teaching/ML-MT2016/slides/slides06.pdf

https://www.cs.ox.ac.uk/people/varun.kanade/teaching/ML-MT2016/slides/slides07.pdf

https://www.cs.ox.ac.uk/people/varun.kanade/teaching/ML-MT2016/slides/slides08.pdf

https://www.cs.ox.ac.uk/people/varun.kanade/teaching/ML-MT2016/slides/slides09.pdf

https://www.cs.ox.ac.uk/people/varun.kanade/teaching/ML-MT2016/slides/slides11.pdf

https://www.cs.ox.ac.uk/people/varun.kanade/teaching/ML-MT2016/slides/slides13.pdf

https://www.cs.ox.ac.uk/people/varun.kanade/teaching/ML-MT2016/slides/slides15.pdf

https://www.cs.ox.ac.uk/people/varun.kanade/teaching/ML-MT2016/slides/slides16.pdf

Stanford - Deep Learning

https://cs231n.stanford.edu/slides/2025/

Carnegie Mellon

Root directories with slides:

https://www.cs.cmu.edu/~bapoczos/Classes/ML10715_2015Fall

https://www.cs.cmu.edu/~tom/10701_sp11/lectures.shtml

https://www.cs.cmu.edu/~mgormley/courses/10423/schedule.html

https://www.andrew.cmu.edu/course/10-703


Carnegie Mellon – 10-423/623/723 Generative AI: Transformer Language Models

Books

These are true treasures. Keep them in mind when you need serious answers, because tutorials are nothing more than handbooks (and often bad ones).

Mathematics for Machine Learning

All the basic Math, Geometry, and Statistics you will need to deeply understand how Machine Learning works.

Artificial Intelligence: A Modern Approach, Global Edition

An excellent book on AI as a whole (not only Machine Learning): from agents to reinforcement learning, from basic classifiers to Neural Networks.

Deep Learning (Adaptive Computation and Machine Learning series)

The masterpiece for Deep Learning

Pattern Recognition and Machine Learning (Information Science and Statistics)

Bayesian view of machine learning with probabilistic graphical models, approximate inference (variational Bayes, EP), and kernel methods. A classic for understanding ML as probabilistic modeling.

Transformers for Natural Language Processing

Practitioner-oriented deep dive into the transformer architecture, BERT-like encoders, GPT-style decoders, Hugging Face ecosystem, and applications such as machine translation, summarization, and GPT-3/ChatGPT-style generation.

Foundations of Large Language Models

Focuses specifically on the theory and fundamentals of pre-training, generative models, prompting, and alignment.

Top free websites

Below is a collection of websites that offer free tutorials and access to state-of-the-art AI papers. Start with Google, then explore GeeksforGeeks, then move on to AI Summer. The rest are optional, but they will blow your mind for sure.

I strongly recommend reading every post on this website, because they get straight to the point. The posts cover practical AI topics such as Machine Learning, Deep Learning, CNNs, Autoencoders, Transformers, Generative Learning, Graph Neural Networks, Computer Vision, and many more. Be careful! These tutorials assume you are already familiar with some basic concepts, so do not start here.

LLM University (LLMU) by Cohere offers a comprehensive set of learning resources, expert-led courses, and step-by-step guides to help you start building AI applications. The course consists of 8 modules covering LLMs, text representation, text generation, deployment, semantic search, prompt engineering, Retrieval-Augmented Generation (RAG), and tool use. It is suitable for both beginners and advanced learners, covering everything from the basics to advanced LLM concepts.

Personal reading suggestions

Some reading suggestions. No hands-on material, just fundamental theory.

Explainable AI

Arrieta, Alejandro Barredo, et al. “Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI.” Information Fusion 58 (2020): 82-115.

Longo, Luca, et al. “Explainable Artificial Intelligence (XAI) 2.0: A manifesto of open challenges and interdisciplinary research directions.” Information Fusion 106 (2024): 102301.

Fuzzy Cognitive Maps

Felix, G., et al. “A review on methods and software for fuzzy cognitive maps.” Artificial Intelligence Review 52.3 (2019): 1707-1737.

Papageorgiou, Elpiniki I., and Chrysostomos D. Stylios. “Fuzzy cognitive maps.” Handbook of Granular Computing 123 (2008): 755-775.